AI Image Generation: How Does It Work?

Artificial Intelligence (AI) has revolutionized numerous industries, and one of its most captivating applications is image generation. From creating realistic human faces to producing surreal artworks, AI image generation has opened new avenues in art, design, and technology. This article delves into the mechanisms behind AI-generated images, the models that power them, and the broader implications of this technology.

Understanding the Basics: How Does AI Image Generation Work?

What Are Generative Models?

Generative models are a class of AI algorithms that can create new data instances resembling the training data. In the context of image generation, these models learn patterns from existing images and use this knowledge to produce new, similar images.

The Role of Neural Networks

At the heart of AI image generation are neural networks, particularly deep learning models like Convolutional Neural Networks (CNNs). CNNs are designed to process data with a grid-like topology, making them ideal for image analysis and generation. They work by detecting patterns such as edges, textures, and shapes, which are essential for understanding and recreating images.
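To make the idea of pattern detection concrete, here is a minimal sketch of the sliding-window operation a convolutional layer performs. It uses only plain Python lists; the `convolve2d` helper and the toy image are illustrative assumptions, and the Sobel filter is hand-written here, whereas a real CNN learns its filter weights during training.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map.

    Like CNN layers, this does not flip the kernel (strictly, cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output


# Toy 5x5 grayscale image: dark left side (0), bright right side (1).
image = [[0, 0, 0, 1, 1] for _ in range(5)]

# Hand-written vertical-edge filter (Sobel). A CNN would learn such weights.
sobel_x = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

edges = convolve2d(image, sobel_x)
for row in edges:
    print(row)
```

The response is zero over the flat dark region and large wherever the window straddles the dark-to-bright boundary, which is what "detecting an edge" means in practice.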

Key AI Models in AI Image Generation

Generative Adversarial Networks (GANs)

Introduced by Ian Goodfellow and colleagues in 2014, GANs consist of two neural networks: a generator and a discriminator. The generator creates images, while the discriminator tries to tell them apart from real images. Through this adversarial process, the generator improves its output to produce increasingly realistic images.
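The adversarial objective can be sketched with a few lines of arithmetic. The example below is an illustration, not a training loop: the function names are hypothetical, the discriminator scores are made-up numbers, and the generator loss shown is the commonly used non-saturating variant.

```python
import math


def bce(prediction, target):
    """Binary cross-entropy for a single probability."""
    eps = 1e-12  # guard against log(0)
    p = min(max(prediction, eps), 1 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))


def discriminator_loss(d_real, d_fake):
    """The discriminator wants D(real) close to 1 and D(fake) close to 0."""
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(fake) close to 1."""
    return bce(d_fake, 1.0)


# Hypothetical discriminator outputs (probability that an image is real).
early = {"d_real": 0.9, "d_fake": 0.1}  # fakes are easy to spot
later = {"d_real": 0.6, "d_fake": 0.5}  # fakes have become convincing

for name, scores in (("early", early), ("later", later)):
    d_loss = discriminator_loss(scores["d_real"], scores["d_fake"])
    g_loss = generator_loss(scores["d_fake"])
    print(name, round(d_loss, 3), round(g_loss, 3))
```

As the fakes become harder to distinguish, the generator's loss falls and the discriminator's loss rises. In an actual GAN, each network is updated by gradient descent on its own loss in alternating steps.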

StyleGAN

Developed by NVIDIA, StyleGAN is a GAN variant known for generating high-quality human faces. It introduces a style-based generator architecture, allowing control over different levels of detail in the image. StyleGAN2 and StyleGAN3 further improved image quality and addressed issues like texture sticking.
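One way to picture style-based control is adaptive instance normalization (AdaIN), the mechanism used in the original StyleGAN generator (StyleGAN2 later replaced it with weight demodulation). The sketch below is a simplified illustration on a single tiny feature map; the `adain` helper, the feature values, and the style parameters are all assumptions chosen for readability.

```python
import math


def adain(feature_map, style_scale, style_bias, eps=1e-8):
    """Normalize one feature map, then apply a style-specific scale and bias."""
    values = [v for row in feature_map for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(var + eps)
    return [
        [style_scale * (v - mean) / std + style_bias for v in row]
        for row in feature_map
    ]


# A 2x2 feature map from some generator layer (made-up numbers).
features = [[1.0, 2.0], [3.0, 4.0]]

# Two hypothetical styles applied to the same features.
soft = adain(features, style_scale=0.5, style_bias=0.0)
bold = adain(features, style_scale=2.0, style_bias=1.0)
```

The spatial pattern of the features is preserved, but its statistics are overwritten by the style. Applying different styles at coarse and fine layers is what gives control over different levels of detail.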

Diffusion Models

Diffusion models generate images by starting with random noise and gradually refining it to match the desired output. They have gained popularity due to their ability to produce high-quality images and their flexibility in various applications.
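The noise-and-refine idea can be sketched numerically. The example below assumes a DDPM-style formulation with a linear noise schedule; the step count, schedule endpoints, and helper names are illustrative assumptions. A real model would use a trained neural network to predict the noise, whereas this sketch passes in the true noise to show that the arithmetic inverts cleanly.

```python
import math
import random

random.seed(0)

T = 1000  # number of diffusion steps (assumed)
# Linear schedule of per-step noise levels (assumed endpoints).
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bar[t] is the running product of (1 - beta): how much signal survives at step t.
alpha_bar = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alpha_bar.append(running)


def add_noise(x0, t):
    """Forward process: jump straight to step t by mixing x0 with Gaussian noise."""
    noise = [random.gauss(0.0, 1.0) for _ in x0]
    signal = math.sqrt(alpha_bar[t])
    sigma = math.sqrt(1.0 - alpha_bar[t])
    xt = [signal * x + sigma * n for x, n in zip(x0, noise)]
    return xt, noise


def estimate_x0(xt, predicted_noise, t):
    """Reverse direction: recover the clean signal from a noise estimate."""
    signal = math.sqrt(alpha_bar[t])
    sigma = math.sqrt(1.0 - alpha_bar[t])
    return [(x - sigma * n) / signal for x, n in zip(xt, predicted_noise)]


x0 = [0.5, -0.25, 1.0, 0.0]  # a tiny stand-in for image pixels
xt, true_noise = add_noise(x0, t=500)

# A trained network would predict the noise; here the true noise is used instead.
recovered = estimate_x0(xt, true_noise, t=500)
```

Training teaches the network to make that noise prediction from the noisy image alone. Generation then starts from pure noise and applies many small denoising steps rather than the single jump shown here.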

Stable Diffusion

Stable Diffusion is an open-source diffusion model that enables text-to-image generation. It can also perform inpainting and outpainting, allowing for image editing and extension. Its open-source nature has made it widely accessible for developers and artists.

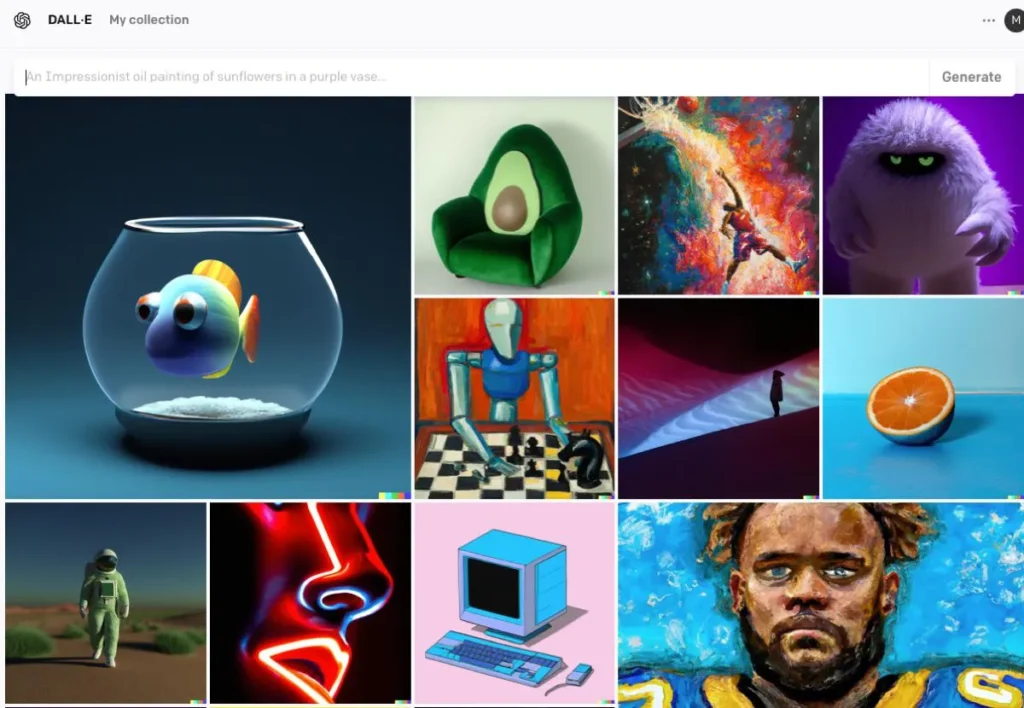

DALL·E

Developed by OpenAI, DALL·E is a transformer-based model capable of generating images from textual descriptions. DALL·E 2 and DALL·E 3 have improved upon the original, offering higher resolution and more accurate image-text alignment. DALL·E 3 is integrated into ChatGPT for enhanced user interaction.

The Process of AI Image Generation

Training the Model

AI models require extensive training on large datasets of images. During training, the model learns to recognize patterns and features within the images, enabling it to generate new images that mimic the training data.

Generating New Images

Once trained, the model can generate new images by:

- Receiving Input: This could be random noise (in GANs), a text prompt (in DALL·E), or an existing image (for editing). A text prompt is first encoded to capture its semantic meaning, so the model can take both content and context into account.

- Processing Input: The model passes the input through its neural network layers, applying learned patterns and features. For text-to-image generation, the encoded text conditions a generative model such as a GAN or a diffusion model. Diffusion models start from random noise and refine it step by step to match the textual description.

- Refinement and Evaluation: In text-conditioned models, attention mechanisms help keep the generated image coherent with the text. In GANs, a discriminator evaluates the image's realism and consistency with the input during training, providing the feedback that drives further refinement.

- Outputting Image: The final output is a new image that reflects the characteristics of the training data and the specific input provided.

Code Example of AI Image Generation

Here are practical Python code examples demonstrating how to generate images using three prominent AI models: Generative Adversarial Networks (GANs), Stable Diffusion, and DALL·E.

Generative Adversarial Networks (GANs) with PyTorch

Generative Adversarial Networks (GANs) consist of two neural networks—the Generator and the Discriminator—that compete with each other to generate new, realistic data instances. Here’s a simplified example using PyTorch to generate images:

```python
import torch
import torch.nn as nn


# Define the Generator network
class Generator(nn.Module):
    def __init__(self):
        super(Generator, self).__init__()
        self.fc1 = nn.Linear(100, 128)
        self.fc2 = nn.Linear(128, 784)  # Assuming output image size is 28x28

    def forward(self, x):
        x = torch.relu(self.fc1(x))
        x = torch.tanh(self.fc2(x))
        return x


# Instantiate the generator
generator = Generator()

# Generate a random noise vector
noise = torch.randn(1, 100)

# Generate an image
generated_image = generator(noise)
```

This code defines a simple generator network that takes a 100-dimensional noise vector as input and produces a 784-dimensional output, which can be reshaped into a 28×28 image. The tanh activation function ensures that the output values are in the range [-1, 1], which is common for image data.
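The reshape and rescale step mentioned above can be sketched without any dependencies. The `to_pixel_grid` helper and the gradient data below are hypothetical stand-ins; they assume a flat list of 784 values in [-1, 1], such as the generator's output converted to a Python list.

```python
def to_pixel_grid(flat_output, height=28, width=28):
    """Reshape a flat tanh output in [-1, 1] into rows of 8-bit pixel values."""
    if len(flat_output) != height * width:
        raise ValueError(
            "expected %d values, got %d" % (height * width, len(flat_output))
        )
    # Map [-1, 1] to [0, 255], clamping to stay within the valid pixel range.
    pixels = [min(255, max(0, round((v + 1.0) * 127.5))) for v in flat_output]
    return [pixels[r * width:(r + 1) * width] for r in range(height)]


# Stand-in for the generator output: a left-to-right gradient from -1 to 1.
fake_output = [-1.0 + 2.0 * (i % 28) / 27 for i in range(784)]
grid = to_pixel_grid(fake_output)
```

With the PyTorch tensor from the example, `generated_image.view(28, 28)` performs the same reshape. Note that an untrained generator produces noise: meaningful images only appear after adversarial training against a discriminator.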

Stable Diffusion with Hugging Face Diffusers

Stable Diffusion is a powerful text-to-image model that generates images based on textual descriptions. The Hugging Face diffusers library provides an easy interface to use this model:

```python
from diffusers import StableDiffusionPipeline
import torch

# Load the pre-trained Stable Diffusion model
pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4")
pipe = pipe.to("cuda")  # Move the model to GPU for faster inference

# Generate an image from a text prompt
prompt = "A serene landscape with mountains and a river"
image = pipe(prompt).images[0]

# Save the generated image
image.save("generated_image.png")
```

This script loads the Stable Diffusion model and generates an image based on the provided prompt. Ensure that you have the necessary dependencies installed and a compatible GPU for optimal performance.

DALL·E with OpenAI API

DALL·E is another text-to-image model developed by OpenAI. You can interact with it using OpenAI’s API:

```python
from io import BytesIO

import requests
from openai import OpenAI
from PIL import Image

# Set your OpenAI API key. This uses the client interface from openai>=1.0;
# the older openai.Image.create call was removed in that release.
client = OpenAI(api_key="your-api-key")

# Generate an image using DALL·E
response = client.images.generate(
    model="dall-e-2",  # 512x512 is a DALL·E 2 size
    prompt="A futuristic cityscape at sunset",
    n=1,
    size="512x512",
)

# Get the URL of the generated image
image_url = response.data[0].url

# Download and save the image
image_response = requests.get(image_url)
image_response.raise_for_status()
image = Image.open(BytesIO(image_response.content))
image.save("dalle_generated_image.png")
```

Replace "your-api-key" with your actual OpenAI API key. This script sends a prompt to the DALL·E model and retrieves the generated image. The image is then saved locally.

CometAPI also integrates the DALL-E 3 API, so you can use a CometAPI key to access DALL-E 3 as well. CometAPI presents this as a more convenient and faster route than calling OpenAI directly.

For more information on the models available in CometAPI, please see the API doc.

These examples provide a starting point for generating images using different AI models. Each model has its unique capabilities and requirements, so choose the one that best fits your project’s needs.

Conclusion

AI image generation stands at the intersection of technology and creativity, offering unprecedented possibilities in visual content creation. Understanding how AI generates images, the models involved, and the implications of this technology is essential as we navigate its integration into various aspects of society.

Access AI Image API in CometAPI

CometAPI provides access to over 500 AI models, including open-source and specialized multimodal models for chat, images, code, and more. Its primary strength lies in simplifying the traditionally complex process of AI integration. With it, access to leading AI tools like Claude, OpenAI, Deepseek, and Gemini is available through a single, unified subscription. You can use the API in CometAPI to create music and artwork, generate videos, and build your own workflows.

CometAPI offers prices far lower than the official prices to help you integrate the GPT-4o API, Midjourney API, Stable Diffusion API (Stable Diffusion XL 1.0 API), and Flux API (FLUX.1 [dev] API, etc.), and you will get $1 in your account after registering and logging in!

CometAPI integrates the latest GPT-4o-image API.