How Does Qwen2.5-Max Work? How to Access It?

Qwen2.5-Max represents a significant advancement in large-scale language models, with strong capabilities in natural language understanding and generation. Developed by Alibaba Cloud's Qwen team, the model combines a Mixture-of-Experts architecture with large-scale pre-training and post-training to deliver strong results across a range of benchmarks.

What is Qwen2.5-Max?

Qwen2.5-Max is a state-of-the-art large-scale language model designed to push the boundaries of natural language understanding and generation. Built on a Mixture-of-Experts (MoE) architecture, it can grow its total capacity without a proportional increase in per-token computation, and it performs strongly across various AI benchmarks. The model has been pre-trained on over 20 trillion tokens, giving it broad coverage of multiple languages, topics, and technical disciplines.

Key Features of Qwen2.5-Max

- Mixture-of-Experts (MoE) Architecture: Saves computation by routing each token to a small subset of experts instead of running the whole network.

- Extensive Pre-training: Trained on a massive dataset for superior comprehension and knowledge representation.

- Enhanced Reasoning and Comprehension: Performs strongly in mathematical problem-solving, logical reasoning, and coding tasks, where it is reported to outperform several competing models.

- Fine-Tuned with Supervised and Reinforcement Learning: Combines supervised fine-tuning (SFT) with Reinforcement Learning from Human Feedback (RLHF) to refine responses and improve usability.

How Does Qwen2.5-Max Work?

1. Mixture-of-Experts (MoE) Architecture

Qwen2.5-Max employs a Mixture-of-Experts system: at each MoE layer, a learned router selects a small subset of expert sub-networks for each token. Because only the selected experts are activated, the model can hold a very large number of parameters in total while spending far less computation per token than a dense model of the same size, which improves efficiency and scalability without sacrificing accuracy.
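The routing step can be illustrated with a minimal, generic top-k MoE layer. This Python sketch is not Qwen2.5-Max's implementation: the number of experts, the choice of k = 2, the router weights `W`, and the toy scaling experts are hypothetical values chosen for illustration. It shows the core mechanism only: a router scores every expert, just the top-k experts run, and their outputs are mixed using renormalised gate weights.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def router_logits(x: Vector, router_weights: Sequence[Vector]) -> List[float]:
    # One score per expert: dot product of the token vector with that expert's router row.
    return [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]


def top_k_route(logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    # Keep the k highest-scoring experts and renormalise their gate weights to sum to 1.
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    gates = softmax([logits[i] for i in chosen])
    return list(zip(chosen, gates))


def moe_layer(x: Vector, router_weights: Sequence[Vector],
              experts: Sequence[Expert], k: int = 2) -> Vector:
    # Only the k selected experts are evaluated; every other expert is skipped.
    out = [0.0] * len(x)
    for idx, gate in top_k_route(router_logits(x, router_weights), k):
        y = experts[idx](x)
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Toy usage (hypothetical): four "experts" that simply scale their input by 1, 2, 3 and 4.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
W = [[0.1, 0.0], [0.0, 0.2], [0.5, 0.5], [1.0, 1.0]]
print(top_k_route(router_logits([1.0, 1.0], W), k=2))  # experts 3 and 2 are selected
print(moe_layer([1.0, 1.0], W, experts, k=2))
```

In a production model each expert is a feed-forward network and the router is applied to every token at every MoE layer; the loop over only the selected experts is what keeps per-token cost proportional to k rather than to the total number of experts.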

2. Extensive Pre-training

The model has been trained on a diverse dataset that spans multiple domains, including literature, coding, science, and conversational language. This extensive training allows Qwen2.5-Max to understand and generate text with remarkable fluency and contextual accuracy.
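Pre-training of this kind is usually framed as next-token prediction: at every position the model scores each vocabulary token, and training minimises the cross-entropy (negative log-probability) of the token that actually comes next. The Python sketch below computes that loss for a toy three-word vocabulary; it assumes Qwen2.5-Max uses the standard objective for decoder-only language models, and the logits are invented for illustration.

```python
import math
from typing import List, Sequence


def log_softmax(logits: Sequence[float]) -> List[float]:
    # log p_i = z_i - logsumexp(z), computed stably by factoring out the max logit.
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def next_token_loss(step_logits: Sequence[Sequence[float]], targets: Sequence[int]) -> float:
    """Mean cross-entropy over a sequence.

    step_logits[t] holds the model's scores over the vocabulary at position t,
    and targets[t] is the index of the token that actually comes next.
    """
    losses = [-log_softmax(scores)[tgt] for scores, tgt in zip(step_logits, targets)]
    return sum(losses) / len(losses)


# Toy example with a 3-token vocabulary and two prediction steps (hypothetical values).
vocab = ["the", "model", "learns"]
logits = [[2.0, 0.5, 0.1],   # scores for the token after position 0
          [0.2, 0.3, 1.5]]   # scores for the token after position 1
targets = [1, 2]             # the true next tokens: "model", then "learns"
print([vocab[t] for t in targets], round(next_token_loss(logits, targets), 4))
```

The loss falls as the model assigns more probability to the correct next tokens, which is the signal that large, diverse training data feeds at scale.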

3. Supervised and Reinforcement Learning Optimization

After pre-training, Qwen2.5-Max undergoes fine-tuning using human-labeled data and Reinforcement Learning from Human Feedback (RLHF). This improves its alignment with human expectations, making it more reliable for various applications.
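A common first step in RLHF is to train a reward model on pairs of responses where a human marked one as preferred; the widely used pairwise (Bradley-Terry) loss pushes the preferred response's score above the rejected one's. The Python sketch below shows only that loss, assuming Qwen2.5-Max's RLHF stage follows this general recipe (its exact method is not described here). The reward scores are hypothetical, and the later step that optimises the model against the reward is not shown.

```python
import math
from typing import Sequence


def pairwise_preference_loss(r_chosen: float, r_rejected: float) -> float:
    """Bradley-Terry loss -log(sigmoid(r_chosen - r_rejected)) for one preference pair."""
    d = r_chosen - r_rejected
    # -log(sigmoid(d)) equals log(1 + exp(-d)); branch so exp() never overflows.
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))


def batch_preference_loss(chosen: Sequence[float], rejected: Sequence[float]) -> float:
    """Mean loss over a batch of reward-model scores for (preferred, rejected) replies."""
    pairs = list(zip(chosen, rejected))
    return sum(pairwise_preference_loss(c, r) for c, r in pairs) / len(pairs)


# Hypothetical reward-model scores for two human-labelled comparisons.
print(pairwise_preference_loss(2.0, 0.5))  # preferred reply already scores higher: small loss
print(pairwise_preference_loss(0.5, 2.0))  # ranking is inverted: larger loss
print(batch_preference_loss([2.0, 0.5], [0.5, 2.0]))
```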

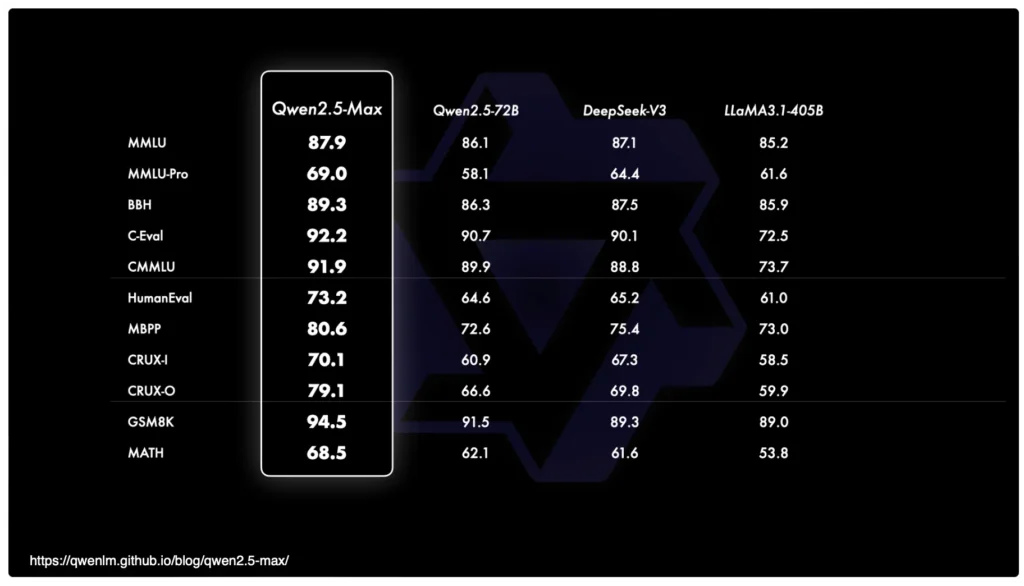

4. Benchmark Performance

Qwen2.5-Max has been reported to outperform leading AI models on several industry-standard benchmarks:

- Arena-Hard, LiveBench, LiveCodeBench, and GPQA-Diamond: Reported to outperform DeepSeek-V3 on these benchmarks.

- Mathematics and Programming: Reported to rank first in mathematics and coding evaluations.

- Complex Task Handling: Reported to rank second on hard, multi-step prompts.

How to Use Qwen2.5-Max

1. Accessing Qwen2.5-Max

Qwen2.5-Max is available via multiple access points. Below are the detailed steps for accessing it:

Access via Official Website

The quickest way to experience Qwen2.5-Max is through the Qwen Chat platform, a web-based interface that lets you interact with the model directly in your browser, much as you would use ChatGPT.

- Visit the Qwen Chat website.

- Sign up or log in using your credentials.

- Navigate to the “Get Started” section.

- Choose between free access (if available) and subscription-based plans.

- Follow the onboarding guide to start using the model.

Using the Qwen2.5-Max API

- Go to the official Qwen API documentation on Alibaba Cloud Model Studio.

- Register an Alibaba Cloud account and activate Model Studio.

- Once verified, log in to the console and create an API key.

- Send requests to the designated endpoints, authenticating each one with your API key.

- Integrate the API into your applications using Python, JavaScript, or any preferred programming language.
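A request can be sketched with Python's standard library alone. The endpoint URL, the model ID `qwen-max-2025-01-25`, the OpenAI-style `/chat/completions` request shape, and the `DASHSCOPE_API_KEY` environment variable name are assumptions based on Alibaba Cloud Model Studio's OpenAI-compatible mode; confirm each against the official documentation before relying on them.

```python
import json
import os
import urllib.request
from typing import Dict, List

# Assumptions to verify in the official docs: OpenAI-compatible base URL and model ID.
BASE_URL = "https://dashscope-intl.aliyuncs.com/compatible-mode/v1"
MODEL = "qwen-max-2025-01-25"


def build_chat_request(api_key: str, messages: List[Dict[str, str]],
                       model: str = MODEL, base_url: str = BASE_URL) -> urllib.request.Request:
    # POST /chat/completions with a Bearer token and a JSON body in the OpenAI chat format.
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=body,
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def chat(api_key: str, prompt: str) -> str:
    # Sends the request over the network and returns the text of the first choice.
    messages = [{"role": "system", "content": "You are a helpful assistant."},
                {"role": "user", "content": prompt}]
    with urllib.request.urlopen(build_chat_request(api_key, messages), timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("DASHSCOPE_API_KEY")  # hypothetical variable name holding your key
    if key:
        print(chat(key, "Which number is larger, 9.11 or 9.8?"))
    else:
        print("Set DASHSCOPE_API_KEY to send a real request.")
```

Keeping request construction separate from sending makes it easy to inspect or log the payload, and to swap `base_url` if your account uses a different region endpoint.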

Access via Cloud Platforms

- Visit a cloud provider hosting Qwen2.5-Max, such as Alibaba Cloud or Hugging Face.

- Create an account if you do not already have one.

- Search for “Qwen2.5-Max” in the AI/ML section.

- Select the model and configure your access settings (compute power, storage, etc.).

- Deploy the model and start using it via an interactive environment or API calls.

Deploying Qwen2.5-Max Locally

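- At launch, Qwen2.5-Max was offered only through Qwen Chat and the API, without downloadable weights, so first check GitHub or the official website for an open-source or downloadable version.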

- Ensure your system meets the hardware requirements (GPUs, memory, storage).

- Follow installation instructions provided in the documentation.

- Install necessary dependencies and configure the environment.

- Run the model locally and test its capabilities.
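A rough way to sanity-check the hardware step is to estimate the memory needed just to hold the weights: parameter count multiplied by bytes per parameter. This Python sketch is a back-of-the-envelope estimate; the 70B parameter count is hypothetical (this article does not state Qwen2.5-Max's size), and the 20% overhead margin for activations and cache is an assumed rule of thumb.

```python
import math

# Bytes used to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "bf16") -> float:
    """Memory (decimal GB) needed just to hold the weights at the given precision."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus(num_params: float, gpu_memory_gb: float, dtype: str = "bf16",
             overhead: float = 1.2) -> int:
    """Smallest GPU count whose combined memory covers the weights plus headroom.

    'overhead' is a hypothetical 20% margin for activations, KV cache and runtime buffers.
    """
    return math.ceil(weight_memory_gb(num_params, dtype) * overhead / gpu_memory_gb)


params = 70e9  # hypothetical 70B-parameter model, for illustration only
for dtype in ("bf16", "int8", "int4"):
    print(f"{dtype}: {weight_memory_gb(params, dtype):.0f} GB of weights, "
          f"at least {min_gpus(params, 80, dtype)} x 80 GB GPUs")
```

For a Mixture-of-Experts model, use the total parameter count rather than the activated count: every expert must be stored in memory even though only a few run per token.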

Using CometAPI (Third-Party AI Platforms)

CometAPI offers prices well below the official rate for integrating the Qwen2.5-Max API, and new users receive $1 in account credit after registering and logging in.

CometAPI provides access to over 500 AI models, including open-source and specialized multimodal models for chat, images, code, and more. Its primary strength is simplifying the traditionally complex process of AI integration. You are welcome to register and try CometAPI.

- Log in to cometapi.com. If you are not a user yet, register first.

- Get an API key for access: in the personal center, go to API tokens, click “Add Token”, and submit to obtain a token key of the form sk-xxxxx.

- Access Qwen2.5-Max (model name: qwen-max) via the web interface, API, or chatbot services.

- Use built-in tools to generate text, code, or analyze data.
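Once you have a token, a CometAPI request can be sketched like any OpenAI-style chat call. Only the model name `qwen-max` and the `sk-xxxxx` token format come from the steps above; the base URL `https://api.cometapi.com/v1` and the OpenAI-compatible `/chat/completions` request and response shape are assumptions to confirm in CometAPI's documentation. The sample response is a hand-written placeholder, not real model output.

```python
import json
import urllib.request
from typing import Any, Dict

# Assumption: CometAPI's OpenAI-compatible base URL; confirm it in the CometAPI docs.
COMET_BASE_URL = "https://api.cometapi.com/v1"


def comet_request(token: str, prompt: str, model: str = "qwen-max") -> urllib.request.Request:
    # token is the "sk-..." key created with "Add Token" in the personal center.
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{COMET_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: Dict[str, Any]) -> str:
    # OpenAI-style responses carry the generated text in choices[0].message.content.
    choices = response.get("choices") or []
    if not choices:
        raise ValueError(f"no choices in response: {response}")
    return choices[0]["message"]["content"]


# Placeholder response with the expected shape (not real model output).
sample = {"choices": [{"message": {"role": "assistant", "content": "Hello!"}}]}
print(extract_reply(sample))
req = comet_request("sk-xxxxx", "Summarise this paragraph in one sentence.")
print(req.full_url, json.loads(req.data)["model"])
```

To send the request for real, pass it to `urllib.request.urlopen(req)` and feed the decoded JSON body to `extract_reply`.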

2. Practical Applications

- Conversational AI: Used for chatbots and virtual assistants.

- Code Assistance: Helps developers generate and debug code efficiently.

- Educational Support: Enhances tutoring systems with high-level reasoning capabilities.

- Content Creation: Generates articles, summaries, and marketing copy with high accuracy.

Comparison with Other AI Models

Qwen2.5-Max vs. GPT-4

| Feature | Qwen2.5-Max | GPT-4 |
|---|---|---|
| Architecture | Mixture-of-Experts | Not officially disclosed |
| Pre-training Data | 20+ trillion tokens | Not officially disclosed (unofficial estimates of ~13 trillion tokens) |
| Efficiency | High (activates only the required experts per token) | Not disclosed (depends on the undisclosed architecture) |
| Code Understanding | Strong (top results in coding benchmarks) | Strong (performs well, though reported below Qwen2.5-Max) |
| Mathematical Reasoning | Advanced | Moderate |

Qwen2.5-Max vs. DeepSeek-V3

| Feature | Qwen2.5-Max | DeepSeek-V3 |
|---|---|---|
| Knowledge Coverage | Broad (multiple domains) | Broad (general-purpose model) |
| Logical Reasoning | High | Moderate |
| API Availability | Yes (via Alibaba Cloud; weights not released) | Yes (official API; weights openly released) |
| Model Adaptability | Fine-tuned for multiple applications | General-purpose; open weights allow custom fine-tuning |

Future Prospects

Qwen2.5-Max is expected to evolve further with the integration of multi-modal capabilities, allowing it to process images, videos, and structured data. Future iterations may include better real-time learning and improved interaction handling, making it an even more powerful tool for AI-driven solutions.

Conclusion

Qwen2.5-Max represents a major advancement in artificial intelligence, matching or surpassing leading models on a range of reasoning, comprehension, and coding benchmarks. With its Mixture-of-Experts architecture, extensive training, and strong benchmark performance, it is poised to transform applications across industries, from software development to customer support and education. As AI continues to evolve, Qwen2.5-Max is well positioned to remain among the leading options for AI-powered solutions.