The OpenThinker-7B API provides access to OpenThinker-7B, a language model designed for complex natural language processing tasks. It gives developers a robust interface for generating, comprehending, and interacting with text.

Technical Description

OpenThinker-7B is built on the transformer architecture, which has become the standard for modern language models. The design draws on decades of neural-network research aimed at capturing context, semantics, and syntax in large-scale data sets. Pretrained on diverse text corpora, OpenThinker-7B handles a variety of tasks such as summarization, question answering, translation, and content generation.

OpenThinker-7B incorporates several techniques that improved on earlier natural language models:

- Self-Attention Mechanism: The model leverages this mechanism to focus on relevant parts of a sentence or paragraph, enhancing its understanding of dependencies between words.

- Pretraining with Large Datasets: Using a vast collection of diverse texts, OpenThinker-7B has learned general language patterns, which gives it the ability to understand nuances, idioms, and complex sentence structures.

- Fine-Tuning Capabilities: The model can be fine-tuned to specific tasks or industries, allowing it to excel in specialized domains such as healthcare, finance, or legal fields.

- Scalable Infrastructure: OpenThinker-7B can be deployed on cloud-based platforms, which lets it scale to enterprise workloads while keeping inference fast.
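To make the self-attention bullet concrete, here is a minimal sketch of textbook scaled dot-product attention, the mechanism introduced with the original Transformer. It is written in plain Python for readability and is a simplification under stated assumptions: a single head, no masking, and no learned query/key/value projections. The text above does not specify OpenThinker-7B's exact attention variant, so treat this as an illustration of the idea, not the model's implementation.

```python
import math

def softmax(scores):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention: each output is softmax(q . K^T / sqrt(d_k)) applied to V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # non-negative attention weights that sum to 1
        # Output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# Self-attention: queries, keys, and values all come from the same token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(scaled_dot_product_attention(tokens, tokens, tokens))
```

Each token's output mixes information from every token, weighted by how strongly its query matches the other tokens' keys; this is what lets the model relate words that are far apart in a sentence.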

The API exposes the model through a standard REST interface, which makes it straightforward to integrate into existing workflows, products, and services and lets businesses add advanced AI capabilities to their operations.

Evolution and Development

The development of OpenThinker-7B marks a significant milestone in the evolution of natural language processing models. As AI research progressed, developers focused on making models more efficient and capable of understanding a wider range of contexts and languages.

Early Stages of OpenThinker

Initially, OpenThinker’s language models were relatively small and required substantial fine-tuning to perform specialized tasks. As AI technology evolved, so did OpenThinker. With each iteration, the team integrated more data, utilized better pretraining techniques, and refined the underlying algorithms.

The journey toward OpenThinker-7B specifically began with OpenThinker-2B, which was a smaller, more experimental version. It demonstrated the foundational capabilities of transformer-based architectures, which were improved upon with OpenThinker-5B. Each release saw improvements in understanding long-range dependencies, multi-turn conversations, and deeper domain-specific knowledge.

The shift to OpenThinker-7B represented a more radical departure, with significant upgrades in the model’s scale, versatility, and real-world application readiness. The integration of cutting-edge fine-tuning techniques and more expansive datasets allowed OpenThinker-7B to become a versatile tool for developers working across diverse industries.

Training Process and Data Utilization

OpenThinker-7B was trained using billions of tokens from an expansive dataset, which included publicly available data as well as proprietary datasets from partner organizations. The dataset comprised a wide array of text types, including:

- Books and articles: Offering vast general knowledge

- Scientific papers: Contributing specialized, technical language understanding

- Web pages and social media content: Providing up-to-date language patterns and contemporary expressions

- Dialogs and conversational data: Enabling the model to perform well in interactive, real-time settings

Training relied on distributed techniques so that the model could process this vast dataset efficiently. Advances in model parallelism, mixed-precision training, and optimization algorithms allowed OpenThinker-7B to achieve strong performance at this scale.
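The paragraph above mentions mixed-precision training without describing the recipe. One technique commonly paired with it is loss scaling: very small gradients underflow to zero in 16-bit floats, so the loss (and therefore every gradient) is multiplied by a large factor before the fp16 step and divided back out afterwards. The sketch below simulates this with Python's `struct` half-precision format (`"e"`). The scale of 2**16 is an illustrative assumption, and nothing here is confirmed as OpenThinker-7B's actual training setup.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision (fp16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def roundtrip_gradient(grad, loss_scale=1.0):
    """Simulate storing a gradient in fp16 after multiplying by loss_scale,
    then dividing the scale back out in full precision."""
    return to_fp16(grad * loss_scale) / loss_scale

LOSS_SCALE = 2.0 ** 16  # illustrative static scale; frameworks often adjust it dynamically

tiny_grad = 1e-8  # below fp16's smallest subnormal (about 6e-8)
unscaled = roundtrip_gradient(tiny_grad)            # flushed to 0.0 in fp16
scaled = roundtrip_gradient(tiny_grad, LOSS_SCALE)  # survives, close to 1e-8
print(unscaled, scaled)
```

Without scaling, the update from this gradient is lost entirely; with scaling, it is preserved to within fp16's roughly three significant digits.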


Advantages

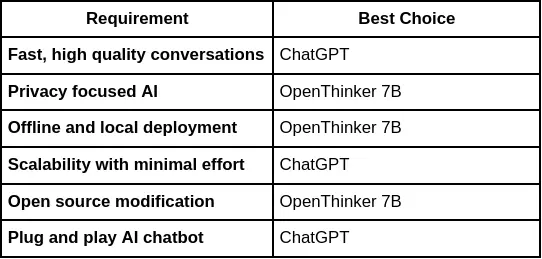

OpenThinker-7B offers several notable advantages over other language models, particularly for business and technical applications.

1. Improved Contextual Understanding

OpenThinker-7B is designed to understand language in a much deeper, more nuanced way than its predecessors. By using self-attention mechanisms and the transformer architecture, the model understands complex sentence structures, idiomatic expressions, and long-range dependencies in text. This ability to comprehend context allows it to provide more relevant and accurate responses in a wide range of applications.

2. Enhanced Language Generation

The text generation capabilities of OpenThinker-7B are significantly more advanced than earlier models. The model can generate text that is not only coherent and contextually appropriate but also highly creative. Whether it’s generating marketing copy, drafting technical documentation, or producing narratives, OpenThinker-7B excels at maintaining high levels of quality across varied content types.

3. Fine-Tuning Flexibility

Rather than being limited to its base training, OpenThinker-7B can be fine-tuned for specific tasks. Businesses can therefore adapt the model to particular challenges, such as customer service automation, legal document summarization, or technical troubleshooting. Fine-tuning lets OpenThinker-7B perform specialized tasks with expertise tailored to an industry's needs.
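Fine-tuning starts with a dataset of example inputs and ideal outputs. The sketch below prepares such a dataset as JSON Lines in a chat-style "messages" layout, a format used by several fine-tuning toolchains. This layout is an assumption: the text above does not say which format or tooling OpenThinker-7B fine-tuning expects, and the legal-summarization examples are hypothetical.

```python
import json

def to_chat_record(system_prompt, user_text, ideal_answer):
    """One supervised example in an assumed chat-style "messages" layout."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": ideal_answer},
        ]
    }

def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)

# Hypothetical domain examples for a legal-summarization fine-tune.
examples = [
    ("You summarize contracts for paralegals.",
     "Summarize: The lessee shall pay rent on the first day of each month.",
     "Rent is due on the 1st of every month."),
    ("You summarize contracts for paralegals.",
     "Summarize: Either party may terminate with 30 days' written notice.",
     "Both sides can end the agreement with 30 days' written notice."),
]
dataset = to_jsonl(to_chat_record(*ex) for ex in examples)
print(dataset)
```

To save the result, write `dataset` to a file such as `train.jsonl` opened with `encoding="utf-8"`. Keeping the system prompt identical across examples helps the tuned model adopt one consistent role.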

4. Scalability and Speed

OpenThinker-7B is built for scalability, able to handle large volumes of requests and integrate into cloud-based infrastructures. Its API can be used in a distributed manner, ensuring that requests are processed in real-time with low latency, making it ideal for dynamic environments where speed and responsiveness are critical.

5. Wide Language Support

OpenThinker-7B offers enhanced multilingual support, allowing businesses and developers to create globalized applications. With fine-tuned performance in over 50 languages, OpenThinker-7B can understand and generate text across diverse linguistic and cultural contexts. This global support allows businesses to reach new markets and operate across international boundaries seamlessly.

6. Robust Problem-Solving

OpenThinker-7B is trained to answer questions, solve technical problems, and provide insights across a wide range of topics. It can handle complex queries in areas such as technical troubleshooting and customer support, and can even propose solutions for R&D teams. Its ability to draw on knowledge from its broad training data makes it a powerful problem-solving tool across domains.

Technical Indicators

To better understand the technical capabilities of OpenThinker-7B, consider the following key indicators:

1. Parameter Count

OpenThinker-7B has 7 billion parameters, a size that balances performance and efficiency. At this scale it maintains a high degree of contextual understanding while remaining lightweight compared with much larger models such as OpenAI's GPT-3, which has 175 billion parameters.
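To show what "relatively lightweight" means in practice, the arithmetic below estimates the memory needed just to hold the weights: parameter count times bytes per parameter. It uses the nominal 7 billion figure from the text and standard sizes for common numeric formats. It deliberately ignores activations, the attention KV cache, and framework overhead, so real deployments need more memory than these numbers.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

# Common storage formats and their size per parameter.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16/bf16": 2.0, "int8": 1.0, "4-bit": 0.5}

for fmt, nbytes in BYTES_PER_PARAM.items():
    print(f"7B   params @ {fmt:<9}: {weight_memory_gb(7e9, nbytes):6.1f} GB")
print(f"175B params @ fp16/bf16: {weight_memory_gb(175e9, 2.0):6.1f} GB")
```

At 16-bit precision the weights of a 7B model take about 14 GB, enough to fit on a single high-memory GPU, whereas a 175B model needs about 350 GB and must be split across many devices.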

2. Training Time

Training OpenThinker-7B required substantial computational resources: the model was trained over several weeks on high-performance GPUs using distributed training techniques. The training process used several petabytes of data, giving the model exposure to a broad spectrum of language and knowledge domains.

3. Inference Latency

The model is designed for fast inference, with typical response times under 200 ms per query, even under high demand. This makes OpenThinker-7B well suited for real-time applications such as chatbots and virtual assistants.
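Latency figures like the one above are best checked against your own workload, since response time depends on prompt length, output length, and network distance. The sketch below times repeated calls and reports nearest-rank percentiles (p50, p95). The `time.sleep` stand-in is a placeholder assumption; substitute a function that sends one real request. Sequential timing like this measures per-request latency, not behavior under concurrent load.

```python
import math
import time

def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list, for pct in (0, 100]."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def measure_latency_ms(call, runs=20):
    """Time call() repeatedly and return per-call latencies in milliseconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()  # monotonic, high-resolution clock
        call()
        latencies.append((time.perf_counter() - start) * 1000)
    return latencies

# Placeholder for a real API call; replace with a function that sends one request.
latencies = measure_latency_ms(lambda: time.sleep(0.01), runs=20)
print(f"p50={percentile(latencies, 50):.1f} ms  p95={percentile(latencies, 95):.1f} ms")
```

Reporting p95 alongside the median matters for real-time applications, because occasional slow responses are what users notice in a chat interface.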

4. Accuracy

OpenThinker-7B reports strong results on industry-standard benchmarks for various tasks:

- GLUE Benchmark: 85% accuracy in natural language understanding

- SQuAD: 90% F1 score for question answering

- Text Generation Quality: Rated among the top in human evaluations for coherence and creativity

These benchmarks show that OpenThinker-7B performs at a competitive level across multiple use cases.
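An F1 score for question answering, like the SQuAD figure above, measures token overlap between the model's answer and a reference answer, after normalizing case, punctuation, and articles. The sketch below mirrors the token-overlap metric of the official SQuAD v1.1 evaluation script: per-question F1 is the best match over the acceptable answers, and the reported figure is the average over all questions, times 100. The text does not say which SQuAD version or evaluation setup was used, and this code does not reproduce the reported result.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)

def normalize_answer(text):
    """Lowercase, strip punctuation and English articles, collapse whitespace."""
    text = "".join(ch for ch in text.lower() if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())

def token_f1(prediction, reference):
    """Token-overlap F1 between one predicted answer and one reference answer."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

def squad_f1(prediction, references):
    """Score against several acceptable answers by taking the best match."""
    return max(token_f1(prediction, ref) for ref in references)
```

For example, the prediction "Paris, France" against the reference "Paris" has precision 0.5 and recall 1.0, giving an F1 of 2/3: partial credit rather than the zero an exact-match metric would give.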

5. Energy Efficiency

While larger models often suffer from high energy consumption, OpenThinker-7B was optimized for energy efficiency during both training and inference. Mixed-precision arithmetic and energy-efficient hardware have significantly reduced the environmental impact of deploying it.

Applications

OpenThinker-7B’s versatility makes it applicable across numerous domains. Here are some of the most notable applications for businesses, developers, and content creators:

1. Customer Support Automation

One of the most popular applications of OpenThinker-7B is in automating customer service. With its ability to understand and generate natural language, the model can be used to power intelligent virtual assistants that answer customer queries, resolve issues, and improve overall customer satisfaction. The model can be fine-tuned to handle specific industries, such as telecom, retail, or banking, providing a personalized experience for each customer.

2. Content Creation and Marketing

OpenThinker-7B is well-suited for content creators and marketers, offering the ability to generate high-quality articles, product descriptions, and advertisements. By integrating it into marketing workflows, businesses can streamline content creation, ensuring that the generated text is both engaging and relevant to target audiences.

3. Healthcare and Medical Applications

In the healthcare sector, OpenThinker-7B can be used to process and generate medical documentation, provide clinical decision support, and assist in the interpretation of medical research. With its ability to analyze complex medical texts, the model can help professionals stay up-to-date with the latest advancements in medical science.

4. Financial Analysis and Risk Management

Financial institutions benefit from OpenThinker-7B’s ability to analyze large volumes of data, generate reports, and assist in risk management. The model can process financial documents, summarize reports, and generate insights, helping organizations make data-driven decisions faster.

5. Education and Learning

OpenThinker-7B is also an effective tool in the education sector. It can be used to create personalized learning experiences, tutor students, or assist teachers in developing curriculum content. Additionally, it can answer questions, generate practice exams, and help students understand complex concepts.

6. Legal and Compliance

Law firms and compliance teams can use OpenThinker-7B to quickly analyze large volumes of legal documents, extract relevant information, and summarize key findings. This capability greatly improves efficiency in tasks like contract review and regulatory compliance.

Conclusion

OpenThinker-7B represents a significant step forward in the development of natural language processing. By combining cutting-edge technology with a flexible and efficient design, OpenThinker-7B offers businesses, developers, and researchers an advanced tool for tackling complex language tasks. Its superior performance, scalability, and ability to be fine-tuned for specific use cases make it a valuable asset for a wide range of industries. As the model continues to evolve, its potential for transforming industries and improving workflows will only increase, positioning it as a key player in the future of AI.

How to call the OpenThinker-7B API from our website

1. Log in to cometapi.com. If you do not have an account yet, register first.

2. Get your API key: in the personal center, go to the API token section, click “Add Token”, and submit. This gives you a token key of the form sk-xxxxx.

3. Note the base URL of the API: https://api.cometapi.com/

4. Select the OpenThinker-7B endpoint and set the request method and request body as described in our website's API documentation. The site also provides an Apifox test page for convenience.
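The sketch below shows how the key and base URL from the steps above might be combined into an HTTP request, using only Python's standard library. Several details are assumptions to be checked against the API documentation: the OpenAI-style path `v1/chat/completions`, the model identifier `openthinker-7b` (hypothetical), the request fields, and the `COMETAPI_KEY` environment variable name (hypothetical). The Bearer-token header is likewise assumed, not confirmed by the steps above.

```python
import json
import urllib.request

BASE_URL = "https://api.cometapi.com/"   # base URL from step 3
CHAT_PATH = "v1/chat/completions"        # ASSUMPTION: OpenAI-style path; confirm in the API doc
MODEL_ID = "openthinker-7b"              # HYPOTHETICAL model identifier; confirm in the API doc

def build_chat_request(api_key, prompt, max_tokens=512):
    """Build (but do not send) a POST request for a single-turn chat completion."""
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,  # assumed field name, as in OpenAI-style APIs
    }
    return urllib.request.Request(
        BASE_URL + CHAT_PATH,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # assumed scheme; key is the sk-xxxxx token from step 2
            "Content-Type": "application/json",
        },
        method="POST",
    )

def send(request, timeout=60):
    """Send the request and return the raw JSON response body as text."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.read().decode("utf-8")

# Usage (needs network access and a valid key):
#   import os
#   raw = send(build_chat_request(os.environ["COMETAPI_KEY"], "Explain recursion briefly."))
#   print(raw)
```

Keeping request construction separate from sending makes it easy to inspect or log the exact payload before any network call is made.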

5. Process the API response: after you send the request, you receive a JSON object containing the generated completion, from which you can extract the answer.
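As a sketch of the final step, the function below pulls the generated text out of a response body. It assumes an OpenAI-style chat completion shape (`choices[0].message.content`) and a top-level `error` field on failure. Both are assumptions, not confirmed by this page, so check the response schema in the API documentation. The sample body is illustrative, not a captured API response.

```python
import json

def extract_answer(raw_body):
    """Return the generated text from an (assumed) OpenAI-style chat completion JSON body."""
    payload = json.loads(raw_body)
    if "error" in payload:  # assumed error envelope; check the API doc for the real one
        raise RuntimeError(f"API returned an error: {payload['error']}")
    choices = payload.get("choices") or []
    if not choices:
        raise ValueError("response contained no choices")
    return choices[0]["message"]["content"]

# Illustrative response body in the assumed shape (not a captured API response).
sample = '{"choices": [{"index": 0, "message": {"role": "assistant", "content": "Hello!"}}]}'
print(extract_answer(sample))
```

Raising explicit errors for error payloads and empty results surfaces problems such as an invalid key immediately, instead of failing later with a confusing `KeyError`.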