The Qwen 2.5 Coder 32B Instruct API is an interface to a large language model built for code generation and related natural language processing tasks: it takes human-written instructions and returns code, explanations, or other text in response.

The Evolution of Qwen’s Coding Capabilities

From General Models to Specialized Coding Assistants

The development journey of Qwen 2.5 Coder 32B Instruct reveals a deliberate progression toward greater specialization in AI-assisted programming. The initial Qwen foundation models established core capabilities in language understanding and generation with particular strength in multilingual processing. With the arrival of the Qwen 2 series, significant architectural improvements enhanced the models’ reasoning abilities and context handling, setting the stage for domain-specific variants. The Qwen 2.5 generation marked a crucial evolutionary step by introducing specialized models optimized for particular tasks, with the Coder variant representing the culmination of research specifically targeting software development assistance. This evolutionary path demonstrates how general-purpose language models can be refined through targeted training to create tools that excel in specific domains while retaining broad capabilities.

Architectural Advancements in Qwen Coding Models

The technical progression of Qwen’s coding models reflects broader advancements in AI architectures optimized for understanding and generating code. Early versions utilized standard transformer designs with minimal code-specific optimizations, limiting their ability to reason about complex programming concepts. The architecture refinements in Qwen 2.5 Coder include specialized attention mechanisms that better capture the hierarchical nature of code, enhanced tokenization systems that efficiently represent programming constructs, and improved positional encoding that helps maintain awareness of scope and structure. These technical improvements enable the model to process longer code segments with greater coherence, understand relationships between different components, and maintain consistency across complex implementations. The current architecture represents a carefully balanced design that prioritizes code understanding while maintaining the flexibility required for diverse programming tasks.

Technical Specifications of Qwen 2.5 Coder 32B Instruct

Model Architecture and Parameters

The core architecture of Qwen 2.5 Coder 32B Instruct is built upon an advanced transformer framework optimized specifically for code understanding and generation. With 32 billion parameters distributed across multiple self-attention layers, the model demonstrates sophisticated pattern recognition particularly suited to the structured nature of programming languages. The architecture incorporates specialized attention mechanisms that help the model maintain awareness of code syntax, language-specific conventions, and functional relationships between different code blocks. Enhanced positional encoding enables better tracking of hierarchical structure in code, including nested functions, classes, and control structures. The model supports an extended context window of 32,768 tokens, allowing it to process entire source files or complex multi-file projects while maintaining coherence and consistency throughout generated implementations.
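To make the context-window figure concrete, here is a minimal sketch of a pre-flight check that estimates whether a set of source files fits in 32,768 tokens. The 4-characters-per-token ratio and the 2,048 tokens reserved for the reply are assumptions for illustration only; an accurate count requires the model's own tokenizer.

```python
import math

CONTEXT_WINDOW_TOKENS = 32_768  # context window figure quoted in this article


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; the chars-per-token ratio is an assumed heuristic."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(sources: list[str], reserved_for_output: int = 2_048) -> bool:
    """Return True if all sources plus a reserved reply budget fit in the window."""
    budget = CONTEXT_WINDOW_TOKENS - reserved_for_output
    return sum(estimate_tokens(s) for s in sources) <= budget
```

If the check fails, one option is to send only the files relevant to the request rather than the whole project.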

Training Data and Knowledge Base

The training methodology for Qwen 2.5 Coder involved exposure to an extensive and diverse corpus of programming materials carefully selected to develop comprehensive coding expertise. The model was trained on billions of lines of code spanning over 40 programming languages, with particular emphasis on popular languages like Python, JavaScript, Java, C++, and Rust. Beyond raw code, the training data included programming documentation, technical blogs, educational resources, and developer discussions from platforms like Stack Overflow and GitHub. This approach ensured the model developed understanding not just of syntax but also of programming concepts, design patterns, and best practices across different domains. Special attention was given to including high-quality code examples with clear documentation, helping the model learn to generate not just functional code but also well-structured and maintainable implementations.

Performance Benchmarks and Metrics

The technical evaluation of Qwen 2.5 Coder 32B Instruct across standard benchmarks demonstrates its exceptional capabilities in programming tasks. On the HumanEval benchmark, which assesses functional correctness of generated Python solutions, the model achieves a pass@1 score exceeding 75%, placing it among the top-performing code generation systems. For the MBPP (Mostly Basic Programming Problems) benchmark, Qwen 2.5 Coder demonstrates approximately 70% accuracy across diverse programming challenges. The model shows particularly strong cross-language capabilities, maintaining consistent performance across Python, JavaScript, Java, C++, and other popular languages. Additional metrics highlight the model’s strength in code explanation tasks, where it achieves high accuracy in describing functionality and identifying potential issues in existing code. These benchmark results validate the effectiveness of Alibaba Cloud’s specialized training approach in developing a model with deep programming expertise.
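For readers unfamiliar with the metric, pass@1 is normally reported using the unbiased pass@k estimator introduced with HumanEval: generate n samples per problem, count the c samples that pass the unit tests, and compute 1 - C(n-c, k) / C(n, k). The sketch below is a minimal Python version of that formula. The function names are illustrative, and any sample counts you feed it are your own; nothing here reproduces Qwen's published results.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them correct."""
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: any k drawn must include a correct one.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over (n, c) pairs, one pair per benchmark problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With k = 1 the estimator reduces to c / n per problem, so the benchmark score is simply the mean fraction of passing samples.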

Advanced Capabilities of Qwen 2.5 Coder 32B Instruct

Multilingual Programming Support

The language versatility of Qwen 2.5 Coder extends across dozens of programming languages, frameworks, and development environments. Beyond proficiency in mainstream languages like Python, JavaScript, and Java, the model demonstrates strong capabilities in emerging languages like Rust, Go, and Kotlin. This multilingual support includes understanding of language-specific idioms, conventions, and best practices, enabling the model to generate code that feels native to each language rather than merely translated. The model’s knowledge encompasses popular frameworks and libraries within each ecosystem, including React, TensorFlow, Django, Spring Boot, and hundreds of others. For web development, the model handles the full stack from HTML/CSS to backend server implementations, database interactions, and deployment configurations. This comprehensive programming language coverage makes Qwen 2.5 Coder valuable across diverse development teams and projects regardless of their technology stack.

Code Understanding and Transformation

Beyond simple code generation, Qwen 2.5 Coder excels at code comprehension tasks that require deep understanding of existing implementations. The model can analyze complex codebases to identify patterns, dependencies, and potential issues, providing insights that help developers navigate unfamiliar code more efficiently. Its refactoring capabilities allow it to suggest structural improvements, identify redundancies, and modernize legacy implementations while preserving functionality. For code maintenance tasks, the model offers migration assistance by translating code between languages or updating implementations to work with newer library versions. The model also demonstrates strong abilities in optimization scenarios, suggesting performance improvements by identifying inefficient algorithms, redundant operations, or opportunities for parallelization. These comprehension-based capabilities make Qwen 2.5 Coder valuable not just for creating new code but also for maintaining and improving existing software systems.

Contextual Problem Solving

A particularly valuable aspect of Qwen 2.5 Coder is its contextual awareness when approaching programming problems. Rather than treating each request in isolation, the model maintains understanding of the broader development context, including project requirements, architectural constraints, and previous interactions. This enables more coherent solutions that align with established patterns and integrate smoothly with existing codebases. The model demonstrates sophisticated requirement interpretation, correctly inferring implied constraints or necessary functionality even when specifications are incomplete. For complex implementations, it exhibits step-by-step reasoning, breaking down problems into logical components and addressing each systematically. When faced with ambiguity, the model can identify multiple potential interpretations and either request clarification or present alternative implementations with explanations. This contextual problem-solving approach distinguishes Qwen 2.5 Coder from simpler code generation systems and makes it more effective in real-world development scenarios.

Practical Applications of Qwen 2.5 Coder 32B Instruct

Software Development Acceleration

In professional development environments, Qwen 2.5 Coder serves as a powerful tool for accelerating coding workflows across various stages of the software lifecycle. During initial development phases, it assists with rapid prototyping by generating functional implementations from high-level specifications, helping teams quickly evaluate different approaches before committing to detailed implementations. For feature development, the model helps programmers implement complex algorithms, optimize database queries, or integrate with external services through well-structured code that follows project conventions. During debugging sessions, it can analyze error messages, suggest potential fixes, and explain underlying issues, significantly reducing time spent troubleshooting. For testing requirements, the model generates comprehensive test cases covering edge conditions and potential failure modes, improving code reliability. These capabilities combine to create a powerful assistant that can potentially reduce development time by 20-40% for many common programming tasks.

Educational and Learning Applications

The educational value of Qwen 2.5 Coder extends across various learning contexts, from beginners taking their first programming steps to experienced developers exploring new technologies. For novice programmers, the model provides clear explanations of fundamental concepts, generates instructive examples, and helps troubleshoot common mistakes with explanations that promote understanding rather than just fixing errors. In academic settings, it serves as a supplementary instructor that can generate custom exercises, provide personalized feedback, and explain complex algorithms or data structures through clear examples and step-by-step breakdowns. For professional developers learning new languages or frameworks, Qwen 2.5 Coder accelerates the learning curve by translating familiar patterns to new environments, explaining idiomatic usage, and highlighting important differences from previously known technologies. This educational dimension makes the model valuable not just for production code but also for building programming knowledge and skills.

Enterprise Integration and Automation

Within business environments, Qwen 2.5 Coder offers significant value through integration with development platforms and automation workflows. The model can be incorporated into continuous integration systems to perform automated code reviews, identifying potential bugs, security vulnerabilities, or deviations from best practices before they reach production. In enterprise development platforms, it provides consistent coding assistance across different teams, helping maintain standardized approaches and knowledge sharing. For legacy system maintenance, the model assists with documenting undocumented code, migrating to modern platforms, and extending existing functionality while maintaining compatibility. In DevOps contexts, it helps generate configuration files, deployment scripts, and infrastructure-as-code implementations tailored to specific cloud environments. These enterprise applications demonstrate how Qwen 2.5 Coder can be leveraged beyond individual productivity to enhance organizational development practices and knowledge management.
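As an illustration of the automated review step, the sketch below turns a diff into a chat-style request body that a CI job could send to the model. The system/user message format, the wording of the instructions, and the 20,000-character cap are all assumptions made for this example, not a documented interface.

```python
# Hypothetical reviewer instructions; adjust to your team's conventions.
REVIEW_INSTRUCTIONS = (
    "You are a code reviewer. Report likely bugs, security issues, and "
    "deviations from best practices as a short bulleted list."
)


def build_review_messages(diff_text: str, max_chars: int = 20_000) -> list[dict]:
    """Build chat messages asking the model to review a unified diff."""
    truncated = len(diff_text) > max_chars
    body = diff_text[:max_chars]
    note = "\n[diff truncated]" if truncated else ""
    return [
        {"role": "system", "content": REVIEW_INSTRUCTIONS},
        {"role": "user", "content": f"Review this diff:\n{body}{note}"},
    ]
```

Marking truncation explicitly lets the model (and the human reading its review) know that the feedback covers only part of the change.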

Comparative Advantages of Qwen 2.5 Coder 32B Instruct

Advantages Over General-Purpose Language Models

When compared to general AI models of similar scale, Qwen 2.5 Coder demonstrates several distinct advantages for programming tasks. The model’s specialized training on programming materials results in significantly higher accuracy for code generation, with fewer syntactic errors and better adherence to language-specific conventions. Its domain-specific architecture optimizations enable more efficient processing of code structures, allowing it to maintain coherence across longer implementations where general models often lose consistency. The Coder variant shows superior understanding of programming concepts such as algorithms, data structures, and design patterns, enabling it to implement more sophisticated solutions to complex problems. Importantly, despite this specialization, Qwen 2.5 Coder maintains strong general language capabilities, allowing it to understand natural language requirements and explain technical concepts clearly—a balance not always achieved by highly specialized models. These advantages make it particularly valuable for real-world development scenarios that require both technical accuracy and clear communication.

Strengths Compared to Other Coding Models

Among specialized coding assistants, Qwen 2.5 Coder 32B Instruct demonstrates several competitive advantages. Its multilingual capabilities exceed many competitors, with consistent performance across a wider range of programming languages rather than excelling primarily in one or two languages. The model shows particularly strong reasoning abilities about code functionality, enabling it to explain complex implementations, identify potential issues, and suggest architectural improvements more effectively than many alternatives. Its instruction following capabilities are notably refined, with better ability to adhere to specific requirements or constraints when generating solutions. For enterprise applications, the model’s knowledge of development practices and software engineering principles helps it generate not just functional code but implementations that follow established patterns for maintainability and scalability. These comparative strengths position Qwen 2.5 Coder as a particularly capable option for organizations seeking comprehensive coding assistance across diverse projects and technologies.

Implementation and Integration Considerations

Deployment Options and Requirements

The practical deployment of Qwen 2.5 Coder 32B Instruct requires consideration of several technical factors to achieve optimal performance. As a 32-billion parameter model, it requires substantial computational resources, typically high-end GPUs or specialized cloud instances for full-scale deployment. However, Alibaba Cloud offers several optimized configurations that reduce resource requirements while maintaining core capabilities. These include quantized versions that reduce memory footprint by 50-70% with minimal performance impact and distilled variants that provide similar functionality with fewer parameters for deployment on more modest hardware. For enterprise environments, the model supports containerized deployment through Docker and Kubernetes, enabling straightforward integration with existing infrastructure. The model can be accessed through both RESTful APIs for network-based integration and direct library implementations for tighter coupling with development environments. These flexible deployment options make the technology accessible across different organizational contexts, from individual developers to large enterprise teams.
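As a back-of-the-envelope check on these memory figures, weight memory scales with parameter count times bits per weight. The sketch below assumes the 32 billion parameters stated in this article, counts weights only (no KV cache, activations, or quantization metadata), and takes 16-bit weights as the baseline. Real footprints are somewhat higher, so treat the output as a lower bound rather than a sizing guide.

```python
PARAMS = 32e9  # parameter count as stated in this article


def weight_memory_gb(params: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


def reduction_vs_fp16(bits_per_weight: int) -> float:
    """Fractional saving relative to 16-bit weights, ignoring overhead."""
    return 1.0 - bits_per_weight / 16


for bits in (16, 8, 4):
    gb = weight_memory_gb(PARAMS, bits)
    saving = reduction_vs_fp16(bits)
    print(f"{bits:>2}-bit weights: {gb:5.1f} GB ({saving:.0%} smaller than 16-bit)")
```

Under these assumptions, 8-bit weights halve the footprint and 4-bit weights cut it by three quarters before overhead, which is in line with the reduction range quoted above once quantization metadata is added back.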

Integration with Development Workflows

To maximize value from Qwen 2.5 Coder 32B, organizations should consider strategic workflow integration approaches that embed its capabilities directly into development processes. The model can be integrated with popular integrated development environments (IDEs) through plugins or extensions that provide contextual assistance directly where developers are working. For team environments, integration with code repository systems like GitHub or GitLab enables capabilities such as automated code review, suggestion generation, or documentation assistance integrated with pull request workflows. In CI/CD pipelines, the model can provide automated quality checks, test generation, or optimization suggestions as part of the build and deployment process. For knowledge management, integration with internal documentation systems helps maintain up-to-date technical documentation that aligns with actual implementations. These integration approaches help move beyond treating the model as a standalone tool to embedding its capabilities throughout the development lifecycle, maximizing productivity benefits while maintaining appropriate human oversight.

Limitations and Future Directions

Current Limitations to Consider

Despite its impressive capabilities, Qwen 2.5 Coder 32B Instruct has several inherent limitations that users should consider. Like all current AI models, it occasionally generates code with logical errors or misunderstandings of requirements, particularly for highly complex or novel programming challenges. The model’s knowledge is limited to its training data, potentially resulting in outdated recommendations for rapidly evolving frameworks or languages released or significantly updated after its training. While Qwen 2.5 Coder excels at generating specific implementations, it may struggle with large-scale architectural decisions requiring deep domain expertise or business context beyond programming knowledge. Users should also be aware that the model might occasionally hallucinate APIs or library functions that don’t exist, especially for less common frameworks. These limitations highlight the importance of human oversight and validation when applying the model’s outputs to production environments or critical systems.
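One inexpensive guard against hallucinated APIs, at least for generated Python, is to check that every import in the output resolves in your environment before running it. The sketch below is one possible approach using only the standard library; the function names are hypothetical. It catches nonexistent modules and nonexistent imported names, but not wrong attribute calls made later in the code. It also imports the referenced modules to inspect them, so run it only in an environment where that is acceptable.

```python
import ast
import importlib
import importlib.util


def _module_exists(name: str) -> bool:
    """True if `name` can be located as a module in the current environment."""
    try:
        return importlib.util.find_spec(name) is not None
    except (ImportError, ValueError):
        return False


def find_unresolved_imports(source: str) -> list[str]:
    """List modules or imported names in `source` that cannot be resolved."""
    problems = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                if not _module_exists(alias.name):
                    problems.append(alias.name)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            if not _module_exists(node.module):
                problems.append(node.module)
                continue
            module = importlib.import_module(node.module)
            for alias in node.names:
                full_name = f"{node.module}.{alias.name}"
                # An imported name may be an attribute or a submodule.
                if (alias.name != "*" and not hasattr(module, alias.name)
                        and not _module_exists(full_name)):
                    problems.append(full_name)
    return problems
```

A non-empty result is a signal to send the code back for human review or to re-prompt the model with the list of unresolved names.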

Future Development Roadmap

The ongoing evolution of Qwen Coder models suggests several promising directions for continued enhancement and specialization. Alibaba Cloud researchers have indicated plans to develop more domain-specialized variants focusing on particular sectors such as financial systems, healthcare applications, or embedded programming. Future iterations may incorporate improved multimodal capabilities, enabling the model to understand and generate code based on diagrams, wireframes, or other visual inputs. The development team is exploring retrieval-augmented generation approaches that would allow future models to directly reference up-to-date documentation or code repositories during generation, addressing current limitations related to recent technology changes. Additional research focuses on enhancing test generation capabilities and developing more sophisticated approaches to code quality assessment beyond functional correctness. These development directions reflect a commitment to continually improving the model’s capabilities while addressing current limitations.


Conclusion

Qwen 2.5 Coder 32B Instruct represents a significant advancement in AI-assisted programming, demonstrating how specialized models can transform software development practices through intelligent automation and enhanced productivity. The model offers particular value for professional development teams seeking to accelerate coding workflows, educational contexts where clear explanation of programming concepts is valuable, and enterprise environments requiring consistent coding assistance across diverse technology stacks. As AI continues to evolve, tools like Qwen 2.5 Coder illustrate the potential for AI-augmented development to enhance human capabilities rather than replace them—enabling developers to focus on higher-level design and innovation while automating more routine aspects of implementation. For organizations looking to leverage AI to improve development efficiency, code quality, and knowledge sharing, Qwen 2.5 Coder 32B Instruct provides a sophisticated solution that balances specialized programming expertise with practical deployment considerations. The continued advancement of such models seems likely to further transform software development practices in coming years, making programming more accessible, efficient, and effective across industries.

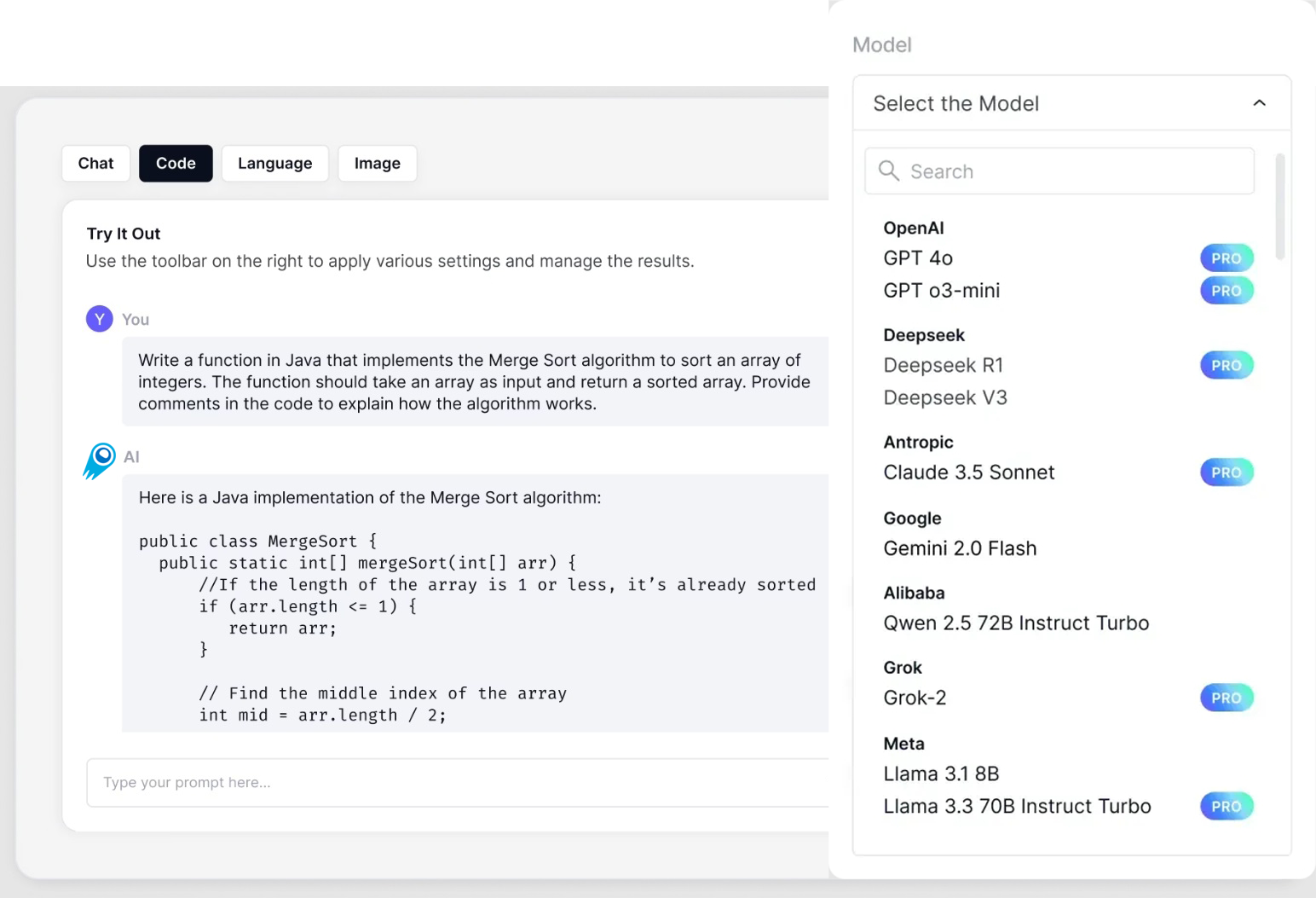

How to call this Qwen 2.5 Coder 32B Instruct API from our website

1. Log in to cometapi.com. If you are not a user yet, please register first.

2. Get an API key, the access credential for the interface. In the personal center, click “Add Token” under API token, obtain the token key (sk-xxxxx), and submit.

3. Get the URL of this site: https://api.cometapi.com/

4. Select the Qwen 2.5 Coder 32B Instruct endpoint, set the request body, and send the API request. The request method and request body are described in our website's API doc. Our website also provides an Apifox test for your convenience.

5. Process the API response to get the generated answer. After sending the API request, you will receive a JSON object containing the generated completion.
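The steps above can be sketched in Python as follows. Only the base URL and the `sk-xxxxx` key format come from this article. The endpoint path, the model identifier, Bearer-token authentication, and the OpenAI-style response shape are all assumptions, so confirm each one against the website's API doc before relying on this.

```python
import json
import urllib.request

BASE_URL = "https://api.cometapi.com/"   # from step 3
CHAT_PATH = "v1/chat/completions"        # ASSUMPTION: OpenAI-compatible path
MODEL_ID = "qwen2.5-coder-32b-instruct"  # ASSUMPTION: check the API doc for the real id


def build_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Step 4: build the POST request (Bearer auth is an assumption)."""
    payload = {"model": MODEL_ID, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        BASE_URL + CHAT_PATH,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_answer(response_body: bytes) -> str:
    """Step 5: pull the completion text out of the JSON response.

    ASSUMPTION: the response follows the OpenAI chat-completion layout.
    """
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


def ask(api_key: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request and return the generated answer."""
    request = build_request(api_key, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_answer(response.read())
```

Calling `ask("sk-xxxxx", "Write a function that reverses a string")` with a real key would perform the round trip; `build_request` and `extract_answer` are kept separate so each can be tested without network access.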