Meta Llama 4 Model Series Full Analysis

What Is Llama 4?

Meta Platforms has unveiled its latest suite of large language models (LLMs), the Llama 4 series, marking a significant advance in its AI technology. The collection launched in April 2025 with two models, Llama 4 Scout and Llama 4 Maverick. Both are natively multimodal: they accept text and images as input (their pretraining data also included video) and generate text. Meta has also previewed Llama 4 Behemoth, a model still in training that Meta describes as one of the most powerful LLMs to date and uses as a teacher for training other models.

How Does Llama 4 Differ from Previous Models?

Enhanced Multimodal Capabilities

Unlike earlier Llama models, Llama 4 is natively multimodal: Meta uses early fusion to combine text and vision tokens in a single model backbone. This lets it analyze text and images together (including several images in one prompt) and respond in text, making it adaptable to diverse applications.

Introduction of Specialized Models

Meta has introduced two specialized versions within the Llama 4 series:

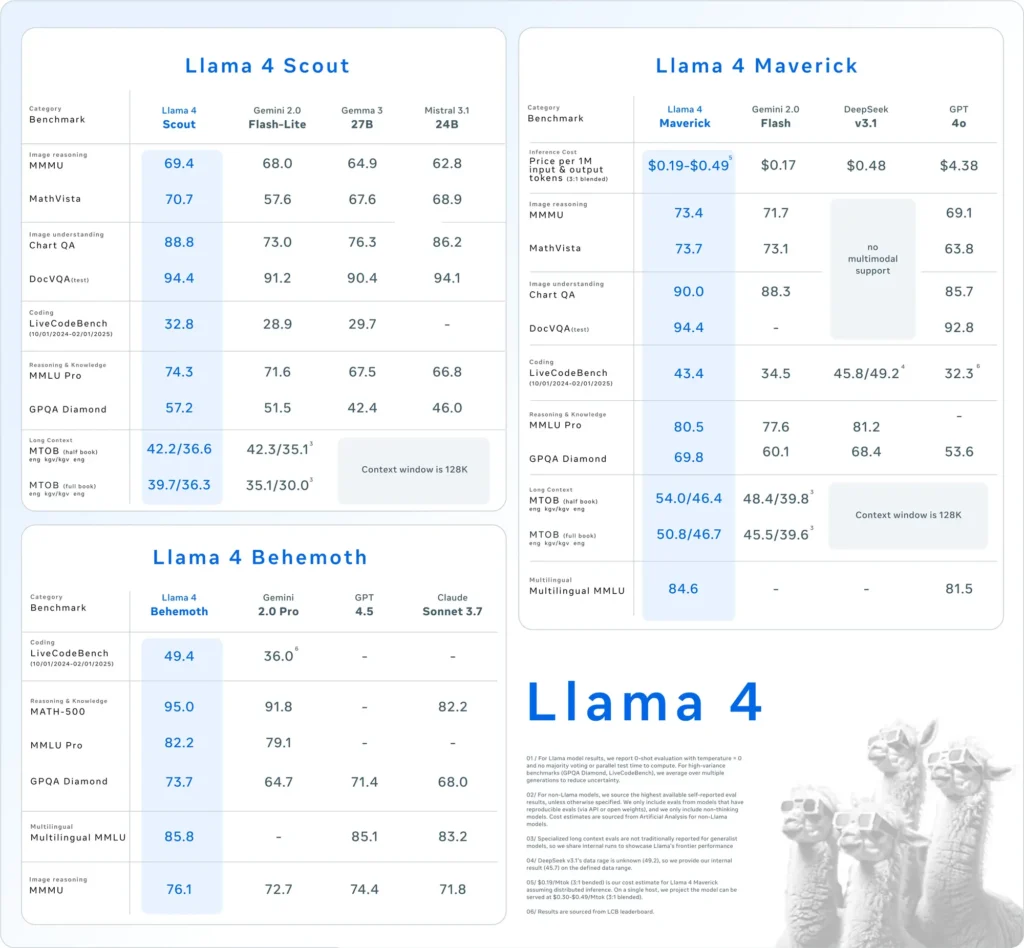

- Llama 4 Scout: A compact model with 17 billion active parameters (109 billion total across 16 experts) that fits on a single Nvidia H100 GPU when its weights are quantized to Int4. It offers a 10-million-token context window and, according to Meta, outperforms competitors such as Google’s Gemma 3 and Mistral 3.1 across a range of benchmarks.

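- Llama 4 Maverick: A larger model with 17 billion active parameters (400 billion total across 128 experts). Its performance is comparable to OpenAI’s GPT-4o and DeepSeek-V3, particularly in coding and reasoning, while it uses fewer active parameters than DeepSeek-V3 (17 billion versus 37 billion).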
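Why can Scout run on one H100 while Maverick cannot? The rough arithmetic below is a sketch under stated assumptions: 109 billion total parameters for Scout and 400 billion for Maverick (Meta's published figures), an 80 GB H100, and weights only, ignoring the KV cache, activations, and runtime overhead, so real requirements are higher. In an MoE model every expert must stay in memory even though only 17 billion parameters are active per token, so the total count, not the active count, drives the footprint.

```python
# Rough weight-only memory estimate: ignores KV cache, activations, and
# framework overhead, so real deployments need more memory than this.
H100_MEMORY_GB = 80  # assumption: the 80 GB H100 variant

# Total parameter counts in billions (Meta's published figures).
TOTAL_PARAMS_B = {"Llama 4 Scout": 109, "Llama 4 Maverick": 400}


def weight_memory_gb(total_params_billion: float, bits_per_param: int) -> float:
    """Gigabytes (1 GB = 1e9 bytes) needed just to store the weights."""
    # billions of parameters * bytes per parameter = gigabytes
    return total_params_billion * bits_per_param / 8


for name, total_b in TOTAL_PARAMS_B.items():
    for bits in (16, 8, 4):
        gb = weight_memory_gb(total_b, bits)
        verdict = "fits" if gb <= H100_MEMORY_GB else "does not fit"
        print(f"{name} @ {bits}-bit: ~{gb:.1f} GB of weights, {verdict} in one H100")
```

At 4 bits per weight, Scout needs about 54.5 GB, under the 80 GB limit, while Maverick still needs about 200 GB.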

Meta is also developing Llama 4 Behemoth, a model with 288 billion active parameters and nearly 2 trillion in total, aimed at surpassing models like GPT-4.5 and Claude 3.7 Sonnet on STEM benchmarks.
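The gap between active and total parameters is easiest to see as a ratio. This small calculation assumes Meta's published figures of 17B active / 109B total for Scout and 17B active / 400B total for Maverick, and rounds Behemoth's "nearly 2 trillion" to 2,000 billion; it is illustrative arithmetic only.

```python
# Share of each model's parameters that run for a single token.
# (active, total) in billions; Scout/Maverick totals are Meta's published
# figures, and Behemoth's "nearly 2 trillion" is rounded to 2000 here.
PARAMS_B = {
    "Llama 4 Scout": (17, 109),
    "Llama 4 Maverick": (17, 400),
    "Llama 4 Behemoth": (288, 2000),
}


def active_fraction(active_b: float, total_b: float) -> float:
    """Fraction of all parameters that are used for each token."""
    return active_b / total_b


for name, (active_b, total_b) in PARAMS_B.items():
    share = active_fraction(active_b, total_b)
    print(f"{name}: {active_b}B of ~{total_b}B parameters active ({share:.1%})")
```

Per-token compute scales roughly with the active count, which is why Maverick can hold 400B parameters while doing about as much work per token as Scout.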

Adoption of Mixture of Experts (MoE) Architecture

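Llama 4 is the first Llama generation to use a “mixture of experts” (MoE) architecture. Instead of one large feed-forward block, an MoE layer contains many smaller “expert” feed-forward networks plus a router that sends each token to only a few of them. Only the selected experts run for a given token, so compute per token tracks the active parameter count rather than the total. In Maverick, for example, Meta says each token is sent to a shared expert and to one of 128 routed experts.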
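The routing idea can be sketched in plain Python. This is a toy illustration, not Meta's implementation: the softmax router, the scalar "experts", and the helper names `moe_layer` and `make_expert` are hypothetical. Meta describes Maverick's MoE layers as sending each token to a shared expert plus one routed expert, so calling `moe_layer` with `top_k=1` and a `shared_expert` mimics that pattern.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def make_expert(scale):
    """Hypothetical toy expert that scales its input (a real expert is an FFN)."""
    return lambda vec: [scale * x for x in vec]


def moe_layer(token, router_weights, experts, shared_expert=None, top_k=1):
    """Send one token through its top_k experts and mix their outputs.

    token: list[float]; router_weights: one weight vector per expert.
    Returns (output vector, indices of the experts that ran).
    """
    # Linear router: one score per expert, turned into probabilities.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    probs = softmax(logits)
    # Only the top_k highest-probability experts are evaluated for this token.
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    out = [0.0] * len(token)
    for i in chosen:
        expert_out = experts[i](token)
        out = [o + probs[i] * y for o, y in zip(out, expert_out)]
    # A shared expert, if present, processes every token.
    if shared_expert is not None:
        out = [o + y for o, y in zip(out, shared_expert(token))]
    return out, chosen


if __name__ == "__main__":
    random.seed(0)
    dim, n_experts = 4, 8
    router = [[random.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n_experts)]
    experts = [make_expert(i + 1) for i in range(n_experts)]
    token = [0.5, -1.0, 0.25, 2.0]
    out, chosen = moe_layer(token, router, experts, shared_expert=make_expert(1.0), top_k=1)
    print("experts evaluated:", chosen, "of", n_experts)
    print("output:", [round(v, 3) for v in out])
```

Only one of the eight toy experts runs per token here, which is the source of MoE's efficiency; production routers also add load-balancing mechanisms that this sketch omits.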

How Does Llama 4 Compare to Other AI Models?

Llama 4 positions itself competitively among leading AI models:

- Performance Benchmarks: Llama 4 Maverick’s performance is on par with OpenAI’s GPT-4o and DeepSeek-V3 in coding and reasoning tasks, while Llama 4 Scout outperforms models like Google’s Gemma 3 and Mistral 3.1 in various benchmarks.

- Open-Source Approach: Meta continues to release Llama models with openly downloadable weights, promoting broader collaboration and integration across platforms. However, the Llama 4 license requires companies with more than 700 million monthly active users to request a separate license from Meta, prompting debate about how open the model really is.

| Category | Benchmark | Llama 4 Maverick | GPT-4o | Gemini 2.0 Flash | DeepSeek v3.1 |
|---|---|---|---|---|---|
| Image Reasoning | MMMU | 73.4 | 69.1 | 71.7 | No multimodal support |
| | MathVista | 73.7 | 63.8 | 73.1 | No multimodal support |
| Image Understanding | ChartQA | 90.0 | 85.7 | 88.3 | No multimodal support |
| | DocVQA (test) | 94.4 | 92.8 | – | No multimodal support |
| Coding | LiveCodeBench | 43.4 | 32.3 | 34.5 | 45.8/49.2 |
| Reasoning & Knowledge | MMLU Pro | 80.5 | – | 77.6 | 81.2 |
| | GPQA Diamond | 69.8 | 53.6 | 60.1 | 68.4 |
| Multilingual | Multilingual MMLU | 84.6 | 81.5 | – | – |
| Long Context | MTOB (half book) eng→kgv/kgv→eng | 54.0/46.4 | Context limited to 128K | 48.4/39.8 | Context limited to 128K |
| | MTOB (full book) eng→kgv/kgv→eng | 50.8/46.7 | Context limited to 128K | 45.5/39.6 | Context limited to 128K |
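One way to read the benchmark table is to compute Maverick's margin over GPT-4o on each benchmark where both models have a score. The snippet below does only that arithmetic, using the Meta-reported values copied from the table; it adds no new data, and rows where GPT-4o has no reported score are left out.

```python
# Maverick vs. GPT-4o, using the Meta-reported scores from the benchmark table.
# Benchmarks without a GPT-4o score (MMLU Pro, MTOB) are omitted.
SCORES = {  # benchmark: (Llama 4 Maverick, GPT-4o)
    "MMMU": (73.4, 69.1),
    "MathVista": (73.7, 63.8),
    "ChartQA": (90.0, 85.7),
    "DocVQA (test)": (94.4, 92.8),
    "LiveCodeBench": (43.4, 32.3),
    "GPQA Diamond": (69.8, 53.6),
    "Multilingual MMLU": (84.6, 81.5),
}


def margins(scores):
    """Point difference (Maverick minus GPT-4o) per benchmark, rounded to 0.1."""
    return {name: round(a - b, 1) for name, (a, b) in scores.items()}


# Print benchmarks from largest to smallest margin.
for name, delta in sorted(margins(SCORES).items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<18} {delta:+.1f} points")
```

On these self-reported numbers, the largest gaps are on GPQA Diamond and LiveCodeBench, and the smallest is on DocVQA.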

How Does Llama 4 Perform in Benchmark Tests?

Benchmark evaluations provide insights into the performance of the Llama 4 models:

- Llama 4 Scout: According to Meta, this model outperforms several competitors, including Google’s Gemma 3 and Mistral 3.1, across various benchmarks. It pairs a 10-million-token context window with weights compact enough to fit on a single H100 GPU when quantized to Int4, making it efficient for long, complex inputs (although serving very long contexts requires additional memory for the KV cache).

- Llama 4 Maverick: Comparable in performance to OpenAI’s GPT-4o and DeepSeek-V3, Llama 4 Maverick excels in coding and reasoning tasks while utilizing fewer active parameters. This efficiency does not come at the expense of capability, making it a strong contender in the LLM landscape.

- Llama 4 Behemoth: With 288 billion active parameters and nearly 2 trillion in total, Behemoth was still in training at launch, but Meta reports that it already outperforms models like GPT-4.5 and Claude 3.7 Sonnet on several STEM benchmarks. Meta used it as a teacher model to distill Llama 4 Maverick, which points to its role as a foundation for future AI development.

These benchmark results underscore Meta’s dedication to advancing AI capabilities and positioning the Llama 4 series as a formidable player in the field.

How Can Users Access Llama 4?

Meta has integrated the Llama 4 models into its AI assistant, making them accessible across platforms such as WhatsApp, Messenger, Instagram, and the web. This integration allows users to experience the enhanced capabilities of Llama 4 within familiar applications.

For developers and researchers interested in leveraging Llama 4 for custom applications, Meta provides access to the model weights through platforms like Hugging Face and its own distribution channels. This open-source approach enables the AI community to innovate and build upon Llama 4’s capabilities.

It’s important to note that while Llama 4 is marketed as open-source, its license requires companies with more than 700 million monthly active users to request a separate license from Meta. Organizations should review the licensing terms to ensure compliance with Meta’s guidelines.

Build Fast with Llama 4 on CometAPI

CometAPI provides access to over 500 AI models, including open-source and specialized multimodal models for chat, images, code, and more. Its primary strength is simplifying the traditionally complex process of AI integration: by aggregating APIs in one platform, it saves the time and resources otherwise spent managing separate platforms and providers. Leading AI tools such as Claude, OpenAI, DeepSeek, and Gemini are available through a single, unified subscription. You can also use the CometAPI API to create music and artwork, generate videos, and build your own workflows.

CometAPI offers prices far lower than the official rates to help you integrate the Llama 4 API, and you will receive $1 in account credit after registering and logging in. You are welcome to register and try CometAPI. Billing is pay-as-you-go, and Llama 4 API pricing on CometAPI is structured as follows:

| API pricing (USD per 1M tokens) | llama-4-maverick | llama-4-scout |
|---|---|---|
| Input tokens | $0.48 | $0.216 |
| Output tokens | $1.44 | $1.152 |
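To turn these per-million-token prices into a budget, multiply each token count by its rate. This is a minimal sketch assuming cost is strictly linear in tokens with no per-request fees or discounts; the 2,000-input / 500-output workload is a hypothetical example, and listed prices may change.

```python
# CometAPI list prices from the pricing table, in USD per 1M tokens.
# Prices can change; treat these as a snapshot.
PRICES_PER_M = {
    "llama-4-maverick": {"input": 0.48, "output": 1.44},
    "llama-4-scout": {"input": 0.216, "output": 1.152},
}


def request_cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request under simple per-token, pay-as-you-go billing."""
    price = PRICES_PER_M[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical workload: 10,000 requests of 2,000 input + 500 output tokens.
for model in PRICES_PER_M:
    per_request = request_cost_usd(model, 2_000, 500)
    print(f"{model}: ${per_request:.6f} per request, ${per_request * 10_000:.2f} per 10k requests")
```

At these rates, that workload comes to about $16.80 on llama-4-maverick and $10.08 on llama-4-scout.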

- Please refer to the Llama 4 API page for integration details.

- For information on newly launched models on CometAPI, see https://api.cometapi.com/new-model.

- For model pricing on CometAPI, see https://api.cometapi.com/pricing.
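The request below is a sketch, not CometAPI's documented client. It assumes CometAPI exposes an OpenAI-compatible chat completions endpoint at `https://api.cometapi.com/v1/chat/completions` with Bearer-token authentication and an OpenAI-style response shape; the model IDs come from the pricing table, and the `COMETAPI_KEY` environment variable name and helper function names are hypothetical. Confirm all of these against CometAPI's integration docs before use. Only the Python standard library is used.

```python
import json
import os
import urllib.request

# Assumption: CometAPI serves an OpenAI-compatible chat completions endpoint
# at this URL; confirm it against CometAPI's own documentation.
COMETAPI_CHAT_URL = "https://api.cometapi.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str, model: str = "llama-4-maverick",
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for a Llama 4 model."""
    body = {
        "model": model,  # model IDs as listed in the pricing table
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        COMETAPI_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(req: urllib.request.Request) -> str:
    """Send the request and return the reply text (assumes an OpenAI-style response)."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("COMETAPI_KEY")  # hypothetical environment variable name
    if key:
        req = build_chat_request(key, "Summarize the Llama 4 model family in one sentence.",
                                 model="llama-4-scout")
        print(send_chat_request(req))
    else:
        print("Set COMETAPI_KEY to send a real request.")
```

Separating request construction from sending keeps the payload easy to inspect and test without network access.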

Start building on CometAPI today – sign up here for free access or scale without rate limits by upgrading to a CometAPI paid plan.

What Are the Implications of Llama 4’s Release?

Integration Across Meta Platforms

Llama 4 is integrated into Meta’s AI assistant across platforms such as WhatsApp, Messenger, Instagram, and the web, enhancing user experiences with advanced AI capabilities.

Impact on the AI Industry

The release of Llama 4 underscores Meta’s aggressive push into AI, with plans to invest up to $65 billion in expanding its AI infrastructure. This move reflects the growing competition among tech giants to lead in AI innovation.

Energy Consumption Considerations

The substantial computational resources behind Llama 4 raise concerns about energy consumption and sustainability. Meta has said Llama 4 was trained on a cluster of more than 100,000 GPUs, and operating a cluster of that size demands significant energy, prompting discussions about the environmental impact of large-scale AI models.
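To put "significant energy" in numbers, here is a back-of-the-envelope estimate for a 100,000-GPU cluster. It assumes every GPU draws the H100 SXM's 700 W maximum board power around the clock, ignores CPUs and networking, and uses a hypothetical power usage effectiveness (PUE) of 1.2 to stand in for cooling and other facility overhead. It is an upper-bound sketch, not a measurement of Meta's cluster.

```python
# Back-of-the-envelope power estimate for a 100,000-GPU cluster.
# Assumptions: each GPU draws its 700 W maximum (H100 SXM board power) around
# the clock; CPUs and networking are ignored; a PUE multiplier stands in for
# cooling and other facility overhead.
GPU_COUNT = 100_000
WATTS_PER_GPU = 700


def cluster_power_mw(gpus: int, watts_per_gpu: float, pue: float = 1.0) -> float:
    """Facility power in megawatts; pue > 1 adds cooling and other overhead."""
    return gpus * watts_per_gpu * pue / 1_000_000


def energy_mwh(power_mw: float, hours: float) -> float:
    """Energy in megawatt-hours for a constant load."""
    return power_mw * hours


gpu_only = cluster_power_mw(GPU_COUNT, WATTS_PER_GPU)
with_overhead = cluster_power_mw(GPU_COUNT, WATTS_PER_GPU, pue=1.2)  # hypothetical PUE
print(f"GPUs alone: {gpu_only:.0f} MW, {energy_mwh(gpu_only, 24):,.0f} MWh per day")
print(f"With PUE 1.2: {with_overhead:.0f} MW, {energy_mwh(with_overhead, 24):,.0f} MWh per day")
```

Under these assumptions the GPUs alone draw about 70 MW, or roughly 1,680 MWh per day; actual consumption depends on utilization, hardware mix, and training duration.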

What Does the Future Hold for Llama 4?

Meta plans to discuss further developments and applications of Llama 4 at the upcoming LlamaCon conference on April 29, 2025. The AI community anticipates insights into Meta’s strategies for addressing current challenges and leveraging Llama 4’s capabilities across various sectors.

In summary, Llama 4 represents a significant advancement in AI language models, offering native multimodality and a mixture-of-experts architecture. Despite open questions about licensing and energy use, Meta’s substantial investments and strategic initiatives position Llama 4 as a formidable contender in the evolving AI landscape.